This blog was originally published on Lorenn Ruster’s website.

Building new AI tools can be exciting and rewarding: you finally solve a problem that may have plagued your organisation for a long time, unlocking new efficiencies and insights. But as a data scientist or developer, it can also be daunting once you realise your power: the tech you’re shaping is shaping behaviour and, at scale, could have profound impacts. Recognising this responsibility can be paralysing when you step back and think about how the thing you built connects to wider implications. A range of questions can ensue.

For Paola Oliva-Altamirano, Director of Data Science at Our Community Innovation Lab, building Artificial Intelligence (AI) tools ‘ethically’ is a high priority. But what does that really mean in practice? Principles like dignity are really important, but nebulous. What can Paola use, in an organisation with limited time and resources, to really interrogate the extent to which a tool she stewards enables dignity? Not just in the outcome, but also in the development process.

These were just some of the questions swirling around for Paola in 2021, after she had built CLASSIEfier, an automated grant classification tool for the SmartyGrants platform.

For Lorenn Ruster, Responsible Tech Collaborator at Centre for Public Impact and PhD Candidate at the Australian National University’s School of Cybernetics, these are fascinating questions at the heart of her PhD, which looks at conditions for enabling dignity-centred AI development.

This post shares a little about a chance collaboration between Paola and Lorenn. You can read more on applying the Dignity Lens to CLASSIEfier in this whitepaper.

On how it started: Lorenn

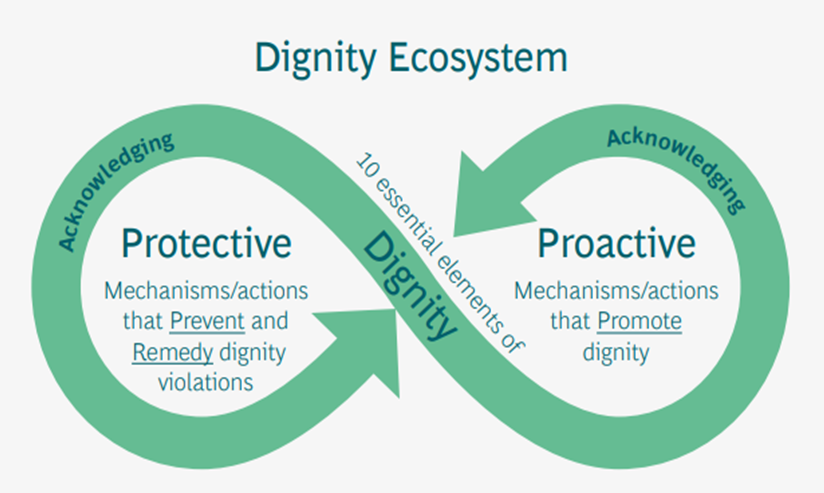

In 2021, I completed a project as part of my Masters of Applied Cybernetics program, working with Thea Snow at the Centre for Public Impact to develop a view of dignity as an ecosystem (see Figure 1), building on the work of Dr. Donna Hicks.

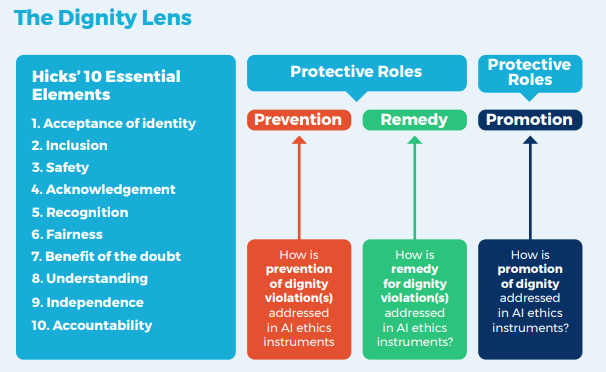

We turned our findings into a Dignity Lens (an analytic tool, see Figure 2) and applied it to the AI ethics instruments of the governments of Australia, Canada and the UK. You can read more about it in this whitepaper.

We were interested in how else we could apply, iterate and improve on the Dignity Lens, particularly in other contexts. And so, when Paola Oliva-Altamirano, Director of Data Science at Our Community, approached us to apply the Dignity Lens to CLASSIEfier (an automated tool developed to classify the more than 1.5 million grants stored in the SmartyGrants database), we were thrilled!

What ensued was a test case of using the Dignity Lens to retrospectively analyse the decisions made throughout the AI development lifecycle of CLASSIEfier and, in doing so, to understand the extent to which those decisions protect and/or promote dignity.

On how it started: Paola

Last year I set out to find a way to document the work that we did while developing CLASSIEfier, a grants auto-classification tool.

I had many questions like:

- Have we done the right thing?

- Have we done everything we could for this tool to be as ethical as possible?

- How did we mitigate as many biases as possible?

- How do we really have these conversations as an organisation?

I hunted around for something we could use to think through this further and quickly became bamboozled — so many options, but none really speaking to what I was looking for.

Then we found a fantastic ethical framework developed by Lorenn Ruster and the Centre for Public Impact. We used the Dignity Lens retrospectively as a way to understand how our auto-classification tool enabled human dignity. Lorenn and I have been working together to see her framework in action and to track our tool through every stage of development.

On what was learned: Lorenn

The experience enabled me to iterate on the Dignity Lens, adding stages of the AI development lifecycle to it. Paola and I also reflected together on the power of using the Dignity Lens in this way and found that the Dignity Lens:

- gave Our Community confidence that they were overtly considering dignity and provided a way of documenting decisions to continually improve;

- enabled a common language for discussion and debate on what to preference and why; and

- highlighted elements of dignity that had not previously been considered and sparked ideas on how to achieve further balance between protective and proactive mechanisms across all stages of AI development.

(You can read more detail about our combined reflections in the whitepaper.)

On what was learned: Paola

I learned a lot of things along the way; these two resonate:

- If we are protecting human dignity, we need to ask: the dignity of whom? Whether it is the user, the data owner, the people represented in the data or the developer, every decision plays a different role for each of the stakeholders involved.

- The process would have been faster if I had had this framework in mind from the beginning. It would have steered me in the right direction before I started running in circles. I’m now considering how I would include this framework in the upfront planning and design process of any future tools we develop.

Take a look at the whitepaper for further details, including analysis tables where the decisions made by the Our Community team in developing CLASSIEfier are mapped to Hicks’ 10 essential elements of dignity, the stage of the AI development lifecycle and whether they are mechanisms that protect and/or promote dignity.

If you or your organisation are also looking for ways to retrospectively analyse decisions made or prospectively design with dignity in mind, we’d love to hear from you!

_Paola Oliva-Altamirano is the Director of Data Science at Our Community. A research scientist trained in astrophysics, Paola designs algorithms to improve understanding of social sector data, with the goal of facilitating human-centred artificial intelligence (AI) solutions. She is actively involved in AI ethics discussions at the Innovation Lab and as a member of the Standards Australia AI Committee. Paola graduated in physics in Honduras and later completed a PhD and post-doc in astrophysics at Swinburne University of Technology, Melbourne. In 2016 she co-founded Astrophysics in Central America and the Caribbean (Alpha-Cen) to support students in developing countries pursuing careers in science. She is currently the organisation’s vice-president. Paola has been with the SmartyGrants Innovation Lab since 2018._

_Lorenn Ruster is a social-justice-driven professional and systems change consultant. Currently, Lorenn is a PhD candidate at the Australian National University’s School of Cybernetics and a Responsible Tech Collaborator at Centre for Public Impact. Previously, Lorenn was a Director at PwC’s Indigenous Consulting and a Director of Marketing & Innovation at a Ugandan solar energy company whilst a Global Fellow with the impact investor Acumen. She also co-founded a social enterprise leveraging sensor technology for community-led landmine detection whilst part of Singularity University’s Global Solutions Program. Her research investigates conditions for dignity-centred AI development, with a particular focus on entrepreneurial ecosystems._