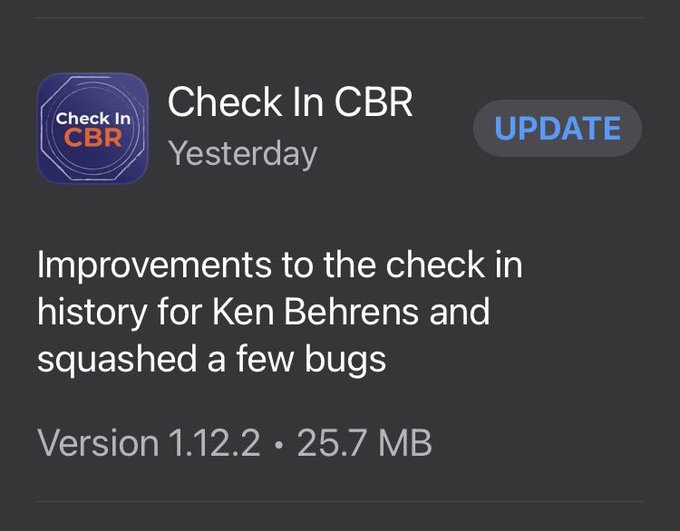

Around August last year, during Canberra’s second COVID-19 lockdown, the name “Ken Behrens” began appearing all over Canberra: on traffic signage, on bumper stickers, and even in the ACT COVID-19 check-in app’s bug report. Who was this mysterious Ken Behrens, and why was their name suddenly ubiquitous?

You might already know one origin story of Ken Behrens. During a live-transcribed press conference providing updates on the pandemic, ACT Chief Minister Andrew Barr thanked his constituents for complying with public health measures by saying “Good job, Canberrans”. However, the live transcription software predicted that Barr had said “Good job, Ken Behrens”. Unfortunately, the transcriptionist didn’t catch the error in time, the erroneous caption was broadcast live, and the #KenBehrens meme was born. But this error isn’t the fault of the transcriptionist.

There’s an untold story behind this seemingly innocuous mis-transcription.

Good job Ken Behrens pic.twitter.com/lZ8w8Jnz4U

— arwon (@arwon) August 13, 2021

Ken Behrens isn’t just a Canberra meme, or a Madagascar-based wildlife photographer. “Ken Behrens” is the visible manifestation of a more insidious problem, one that is systemic and structural. To understand how “Canberrans” was transcribed as “Ken Behrens”, we must dig deeper and untangle how voice technologies like speech recognition work, and how they don’t work well for everyone. Cybernetics as a discipline gives us the tools to do this, helping us ask incisive questions about a system’s origin, its boundaries, actors, interactions and emergent behaviour. Moreover, cybernetics helps us formulate impactful interventions in those systems, helping us create a world we want to live in.

Ken Behrens is a cybernetic detective story.

Many of us use voice technologies. You might use a voice assistant in your home to play music, or an assistant on your mobile device to control your lights or thermostat, or you might use the console in your car to get directions. Voice and speech recognition technologies are becoming ubiquitous – they are increasingly used as components in myriad information infrastructures. Indeed, industry predictions estimate that by next year, there will be more than 8 billion voice assistant devices in use – more than one for each person on the planet (Ammari et al., 2019; Dale, 2020; Kinsella & Mutchler, 2020a, 2020b).

A key component of voice assistant technologies is speech recognition, which takes spoken audio and predicts a written transcription of what the speaker said. Transcription software, such as that used by producers of press conferences, also uses speech recognition algorithms. Speech recognition uses machine learning (ML), a statistical approach to identifying patterns in large volumes of data, to predict the written characters and words from an audio file (Deng & Li, 2013; J. Li et al., 2015; Russell & Norvig, 2021).

Live transcription makes this prediction in real time, as the speaker is talking, so there is often very little time to correct errors before the transcription is broadcast. Online transcription services, such as those you might have used to transcribe interviews during research, make this prediction in bulk and notify you when the transcript is ready. In this scenario, there is often more time for a human transcriber to validate the machine learning algorithm’s prediction.

While the speech recognition algorithms used by transcription companies vary, they share one important element: they are all trained on data. A speech recognition dataset contains two types of data: audio files of a speaker saying a phrase, called an utterance, and a matching written sentence, called the transcription. Speech recognition data is often expensive to acquire, so many companies use open-source (freely available) datasets to build their ML models. Some of the most common ones include Mozilla’s Common Voice and LibriSpeech (Ardila et al., 2019; Panayotov et al., 2015).

All speech recognition data comes from somewhere: it has provenance (Buneman et al., 2001). A dataset’s provenance is often described using metadata. For example, metadata standards for describing the provenance of material held in archives and libraries have existed for decades (Deelman et al., 2009; Zeng & Qin, 2016). Metadata standards for describing voice and speech data don’t exist yet, although there are nascent efforts to better describe the provenance of data used for speech recognition and natural language processing (Bender & Friedman, 2018; Gebru et al., 2018). So, data is expensive, and we don’t always know where it comes from.
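To make provenance concrete, here is a minimal Python sketch of a dataset record that keeps provenance metadata alongside the utterance-transcription pair, plus a check that counts records whose provenance is unknown. The field names (`source`, `licence`, `speaker_accent`), the function name and the toy records are all hypothetical; since no metadata standard for speech data exists yet, this does not follow any particular schema.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Utterance:
    """One utterance-transcription pair plus illustrative (hypothetical) provenance fields."""
    audio_path: str                          # where the recorded audio clip lives
    transcription: str                       # what the speaker said, as text
    source: Optional[str] = None             # where the recording came from
    licence: Optional[str] = None            # terms under which it may be used
    speaker_accent: Optional[str] = None     # accent label, if one was recorded


PROVENANCE_FIELDS = ("source", "licence", "speaker_accent")


def missing_provenance(dataset: list[Utterance]) -> dict[str, int]:
    """For each provenance field, count how many records leave it empty."""
    return {
        name: sum(1 for record in dataset if getattr(record, name) is None)
        for name in PROVENANCE_FIELDS
    }


# Toy records, invented for illustration
dataset = [
    Utterance("clips/0001.wav", "good job canberrans",
              source="press conference", licence="CC0", speaker_accent="australian"),
    Utterance("clips/0002.wav", "turn on the lights", source="crowdsourced"),
    Utterance("clips/0003.wav", "play some music"),
]
print(missing_provenance(dataset))
```

A report like this is only a cheap first audit, but the records with no `speaker_accent` are exactly the ones that make it hard to check later whether a dataset's accents are balanced.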

These points become important as we explore how speech recognition algorithms work. There is a slew of speech recognition algorithms, but they generally share two components: an acoustic model, which recognises the sounds being spoken and predicts characters from those sounds; and a language model, which takes those characters and predicts words. The acoustic model is trained on the utterance-transcription pairs. How well it predicts the right character for a sound depends on whether that sound, paired with its transcription, appears frequently in the training data. The language model is trained on a large collection of sentences, known as a corpus. How well it predicts the correct word from given characters depends significantly on whether that word appears frequently in the corpus (Scheidl et al., 2018; Seki et al., 2019; Zenkel et al., 2017).
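Acoustic models are often trained with connectionist temporal classification (CTC), the technique the cited decoding papers build on. The sketch below shows the simplest CTC decoding strategy, greedy (best-path) decoding: pick the most likely symbol in each short audio frame, merge consecutive repeats, then remove a special “blank” symbol. It is an illustration under assumptions, not a description of any particular transcription product: the underscore used as the blank, the hypothetical `peaked_frames` helper and its probabilities are invented, and practical systems usually use beam search combined with a language model instead.

```python
from __future__ import annotations

BLANK = "_"  # CTC "blank" symbol; the underscore is an arbitrary choice for this sketch


def ctc_greedy_decode(frames: list[dict[str, float]]) -> str:
    """Best-path CTC decoding: most probable symbol per frame,
    merge consecutive repeats, then drop blanks."""
    best_path = [max(frame, key=frame.get) for frame in frames]
    characters = []
    previous = None
    for symbol in best_path:
        # A repeat only counts as a new character if something (e.g. a blank) separated it
        if symbol != previous and symbol != BLANK:
            characters.append(symbol)
        previous = symbol
    return "".join(characters)


def peaked_frames(path: str, peak: float = 0.9) -> list[dict[str, float]]:
    """Hypothetical helper faking acoustic-model output: one frame per symbol
    in `path`, with most of the probability mass on that symbol."""
    frames = []
    for symbol in path:
        runner_up = "a" if symbol == BLANK else BLANK
        frames.append({symbol: peak, runner_up: 1.0 - peak})
    return frames


print(ctc_greedy_decode(peaked_frames("kk_enn_berr_ens")))  # kenberens
print(ctc_greedy_decode(peaked_frames("canber_ra")))        # canberra
print(ctc_greedy_decode(peaked_frames("canberra")))         # canbera
```

The last call shows why CTC needs the blank: without one between the two frames of “r”, the doubled letter in “Canberra” collapses into a single “r”.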

Now that we know about the data used for acoustic and language models, we know enough about the components of speech recognition systems to speculate, in an informed way, about what may have produced the “Ken Behrens” mis-transcription.

Let’s take the acoustic model first. Each spoken language is made up of building blocks of sound called phonemes. Phonemes don’t just vary by language; they also vary by accent. For example, a person with an American accent [1] says the word “vase” so that it rhymes with “ways”, whereas a person with an Australian accent says “vase” to rhyme with “paths”. Similarly, American-accented people tend to pronounce “Melbourne” as “mel-BORNE”, whereas Australian-accented folks say “MEL-bun”. American-accented people also tend to pronounce “Canberra” more like “can-BEH-ra” than “CAN-BRA”. So, if the data used to train the acoustic model contains mostly American accents, the model will tend to be more accurate for American-accented speakers.
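One way to notice an imbalance like this, assuming the training data carries accent labels at all, is simply to count them. The sketch below computes each accent's share of a toy set of labels and flags accents below a threshold; the labels, the 20% threshold and the function names are illustrative assumptions rather than an established procedure.

```python
from __future__ import annotations

from collections import Counter


def accent_shares(accent_labels: list[str]) -> dict[str, float]:
    """Proportion of training utterances carrying each accent label."""
    counts = Counter(accent_labels)
    total = sum(counts.values())
    return {accent: count / total for accent, count in counts.items()}


def under_represented(accent_labels: list[str], minimum_share: float) -> list[str]:
    """Accents whose share of the data falls below the chosen threshold, sorted by name."""
    return sorted(
        accent for accent, share in accent_shares(accent_labels).items()
        if share < minimum_share
    )


# Toy labels: 8 American, 1 Australian and 1 Scottish utterance (invented)
labels = ["american"] * 8 + ["australian", "scottish"]
print(accent_shares(labels))
print(under_represented(labels, minimum_share=0.2))  # ['australian', 'scottish']
```

Counting is only a first step: a healthy share of data does not by itself guarantee accuracy for an accent, which is why evaluation per accent also matters.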

Next, let’s look at the language model. A language model covers a domain, or set of vocabulary, which reflects the phrases expected to be encountered where the speech recognition model is deployed. For example, if you’re using speech recognition for medical transcription, you would expect the language model to cover medical terminology. A word that is not in the language model is considered out of vocabulary (OOV). While there are methods that guess at OOV words by predicting parts of words (like the prefix “pre-” or the suffix “-ing”), OOV words are less likely to be predicted (Bazzi, 2002; Chen et al., 2019). One group of words that is often OOV is named entities: people, products and places. A whole subfield of natural language processing (NLP) exists around named entity recognition (Galibert et al., 2011; C. Li et al., 2021; Mao et al., 2021; Yadav & Bethard, 2019).
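The sketch below illustrates both ideas with toy data: flagging words that fall outside a language model's vocabulary, and a greedy longest-match segmentation that falls back to known word pieces. Greedy longest-match is one simple subword strategy, similar in spirit to WordPiece-style tokenisers; the vocabulary, the piece inventory and the function names are hypothetical, and the methods in the cited work are more sophisticated.

```python
from __future__ import annotations

from typing import Optional


def find_oov(sentence: str, vocabulary: set[str]) -> list[str]:
    """Words in the sentence that the language model's vocabulary does not contain."""
    return [word for word in sentence.lower().split() if word not in vocabulary]


def segment_subwords(word: str, subwords: set[str]) -> Optional[list[str]]:
    """Greedy longest-match-first segmentation into known word pieces.
    Returns None when some position in the word matches no piece at all."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shorter ones
        for end in range(len(word), start, -1):
            if word[start:end] in subwords:
                pieces.append(word[start:end])
                start = end
                break
        else:  # no piece matched at this position
            return None
    return pieces


vocabulary = {"good", "job", "canberra"}
subwords = {"canberra", "ken", "ns", "s"}
print(find_oov("Good job Canberrans", vocabulary))  # ['canberrans']
print(segment_subwords("canberrans", subwords))     # ['canberra', 'ns']
print(segment_subwords("xyz", subwords))            # None
```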

The word “Canberra”, for example, is a named entity: a place name derived from the now-sleeping Ngunnawal Indigenous language (Frei, 2013). Because Canberra is a capital city, it is likely to occur frequently in the written documents, such as Wikipedia articles, news clippings and academic papers, on which language models are trained, so it is highly likely to be in the language model. However, “Canberran” is a demonym, a word used to describe the inhabitants of a particular location, like “Melburnian” or “Taswegian”, and it is less likely to be in the language model. For example, this n-gram plot from Google Books shows the frequency of occurrence of “Canberra” and “Canberran” over time: “Canberran” is far less frequent than “Canberra”.
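A unigram language model, the simplest kind, just estimates each word's probability from its relative frequency in the corpus, which mirrors the comparison in the n-gram plot. The corpus below is invented for illustration, and the function name is hypothetical; real language models condition on surrounding words and are trained on far larger corpora.

```python
from __future__ import annotations

from collections import Counter


def unigram_model(corpus: list[str]) -> dict[str, float]:
    """Relative frequency of each lower-cased word in the corpus (no smoothing)."""
    counts = Counter(word for sentence in corpus for word in sentence.lower().split())
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


# Toy corpus of 20 words, invented for illustration
corpus = [
    "Canberra is the capital of Australia",
    "Parliament sits in Canberra",
    "Canberra hosts many national institutions",
    "Canberrans enjoyed the long weekend",
]
model = unigram_model(corpus)
print(model["canberra"])            # 0.15 (3 of 20 words)
print(model["canberrans"])          # 0.05 (1 of 20 words)
print(model.get("canberran", 0.0))  # 0.0 (never seen)
```

Because this sketch applies no smoothing, a word it has never seen, such as the singular “canberran” here, gets zero probability, so it can never win against an in-vocabulary alternative.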

This sheds more light on our cybernetic detective story, and makes it easier to see how a mis-transcription like “Ken Behrens” could happen. Firstly, because of challenges with data provenance, the person building the machine learning model may not know where the data in the training set comes from, or the characteristics of the people speaking in it. Speakers’ accents may not be labelled, which makes it more difficult to “balance” the dataset, that is, to ensure that a mix of accents is included in training. This means that the acoustic model could be trained on accents that don’t sound like Andrew Barr’s Australian accent. The acoustic model could then plausibly predict the letters “k-e-n” for “Can” and “b-e-r-e-n-s” for “berrans”, if that better matches the data it was trained on. Secondly, if we add a language model on top of that, and “Canberrans” is out of vocabulary, the language model is likely to predict something that sounds similar, like “Ken Behrens”, which might be in the vocabulary because he is a well-known photographer.
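The interaction between the two models can be sketched with a deliberately simplified decoder. Here, spelling distance (Levenshtein edit distance) between the acoustic model's character output and each vocabulary entry stands in for acoustic similarity, which is a strong simplifying assumption; real decoders combine acoustic and language model probabilities, for example with beam search. The acoustic outputs, vocabularies and function names are invented for the example.

```python
from __future__ import annotations


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and
    substitutions needed to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # delete char_a
                current[j - 1] + 1,                    # insert char_b
                previous[j - 1] + (char_a != char_b),  # substitute (free if equal)
            ))
        previous = current
    return previous[-1]


def decode(acoustic_output: str, vocabulary: list[str]) -> str:
    """Toy decoder: return the in-vocabulary entry spelled most like the
    acoustic output (spaces ignored). Spelling stands in for sound here."""
    return min(vocabulary, key=lambda entry: levenshtein(acoustic_output, entry.replace(" ", "")))


WITHOUT_DEMONYM = ["good", "job", "canberra", "ken behrens"]
WITH_DEMONYM = WITHOUT_DEMONYM + ["canberrans"]

print(decode("kenberens", WITHOUT_DEMONYM))  # ken behrens
print(decode("kenberens", WITH_DEMONYM))     # ken behrens
print(decode("canberens", WITH_DEMONYM))     # canberrans
```

In this toy setup, adding “canberrans” to the vocabulary is not enough on its own while the acoustic model hears “kenberens” (the second call); it is only chosen once the acoustic output is closer to the Australian pronunciation (the third call), which mirrors the two contributing causes described above.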

By using cybernetic principles to explore systems, we can see that they span cultural, technical and natural components. The “Ken Behrens” meme is much more than an amusement; it’s a visible manifestation of shortcomings and inequalities in the everyday systems, such as live transcription, that are intended to increase access to information.

The turn to data-centric machine learning

So, how might we intervene in this system?

The challenge represented here is related to a growing movement called “data-centric machine learning”. In recent years, research efforts have focused on making machine learning models more accurate, with new architectures, algorithms and more powerful hardware. In contrast, the data-centric movement sees data as the place to intervene within machine learning systems, with a focus on ensuring the provenance and characteristics of the data used for model training are known, explainable and well-documented (Anik & Bunt, 2021; Hutchinson et al., 2021; Paullada et al., 2020; Sambasivan et al., 2021).

A data-centric approach to speech recognition might involve ensuring that the provenance of all data used for training is known in detail; that the training datasets are accompanied by documentation such as datasheets or data statements; and that the trained model is evaluated along multiple axes of variation, such as accent and domain, so that weaknesses can be identified and improved upon. Ensuring that demonyms and named entities specific to the local context in which the model is deployed, such as “Canberrans”, are included in the language model’s vocabulary would also likely have helped in this case.
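Evaluating along an axis like accent can be as simple as computing word error rate (WER), the standard speech recognition metric, separately for each group of test speakers. The sketch below computes corpus-level WER (total word errors divided by total reference words) per accent group. It assumes each test utterance has an accent label; the labels, transcripts and function names are invented for illustration.

```python
from __future__ import annotations

from collections import defaultdict


def edit_distance(reference: list[str], hypothesis: list[str]) -> int:
    """Word-level Levenshtein distance: substitutions + deletions + insertions."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_word in enumerate(reference, start=1):
        current = [i]
        for j, hyp_word in enumerate(hypothesis, start=1):
            current.append(min(
                previous[j] + 1,                           # deletion
                current[j - 1] + 1,                        # insertion
                previous[j - 1] + (ref_word != hyp_word),  # substitution or match
            ))
        previous = current
    return previous[-1]


def wer_by_group(samples: list[tuple[str, str, str]]) -> dict[str, float]:
    """WER per group. Each sample is (group label, reference transcript, model transcript)."""
    errors: dict[str, int] = defaultdict(int)
    words: dict[str, int] = defaultdict(int)
    for group, reference, hypothesis in samples:
        ref_words = reference.split()
        errors[group] += edit_distance(ref_words, hypothesis.split())
        words[group] += len(ref_words)
    return {group: errors[group] / words[group] for group in errors}


# Toy evaluation set, invented for illustration
samples = [
    ("australian", "good job canberrans", "good job ken behrens"),  # 2 errors
    ("australian", "stay at home", "stay at home"),                 # 0 errors
    ("american", "good job canberrans", "good job canberrans"),     # 0 errors
]
print(wer_by_group(samples))
```

Here the Australian group's WER is 2 errors over 6 reference words, while the American group's is zero; a gap like this between groups is a signal to collect or rebalance data for the worse-served group.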

Cybernetics has a role to play in this movement, too. A key focus of our discipline is understanding the components of a system, their historical lineage, their interactions, and the behaviour that emerges from the interactions themselves. Ken Behrens is certainly one example of such emergent behaviour!

So next time you see a mis-transcription or a meme, think like a cyberneticist and ask: “How did the various components of this system interact to yield this outcome?”

For professional integrity, the company undertaking live transcription for the Andrew Barr press conference, AI Media, was contacted in regard to this essay, and I thank them for their feedback and engagement.

If you enjoyed this essay, you might enjoy the Social Responsibility of Algorithms workshop. #AFPLsra22 is hosted by the ANU School of Cybernetics in conjunction with the ANU Centre for European Studies, ANU Fenner School of Environment and Society, CNRS LAMSADE and DIMACS, with the support of the Erasmus+ Programme of the European Union.

And if you think that Cybernetics might be for you, be sure to check out the School of Cybernetics education programs.


Footnotes

[1] I use the term “person with an American accent” because not all people who are of American nationality speak with an American accent, and some people who speak with an American accent are naturalised citizens of other countries. This phrasing serves to disentangle nationality from accent.

Reference list

Ammari, T., Kaye, J., Tsai, J. Y., & Bentley, F. (2019). Music, Search, and IoT: How People (Really) Use Voice Assistants. ACM Transactions on Computer-Human Interaction (TOCHI), 26(3), 17.

Anik, A. I., & Bunt, A. (2021). Data-Centric Explanations: Explaining Training Data of Machine Learning Systems to Promote Transparency. Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, 1–13.

Ardila, R., Branson, M., Davis, K., Henretty, M., Kohler, M., Meyer, J., Morais, R., Saunders, L., Tyers, F. M., & Weber, G. (2019). Common Voice: A Massively-Multilingual Speech Corpus. ArXiv Preprint ArXiv:1912.06670.

Bazzi, I. (2002). Modelling out-of-vocabulary words for robust speech recognition [PhD Thesis]. Massachusetts Institute of Technology.

Bender, E. M., & Friedman, B. (2018). Data statements for natural language processing: Toward mitigating system bias and enabling better science. Transactions of the Association for Computational Linguistics, 6, 587–604.

Buneman, P., Khanna, S., & Tan, W.-C. (2001). Why and where: A characterization of data provenance. International Conference on Database Theory, 316–330.

Chen, M., Mathews, R., Ouyang, T., & Beaufays, F. (2019). Federated learning of out-of-vocabulary words. ArXiv Preprint ArXiv:1903.10635.

Dale, R. (2020). Voice assistance in 2019. Natural Language Engineering, 26(1), 129–136.

Deelman, E., Berriman, B., Chervenak, A., Corcho, O., Groth, P., & Moreau, L. (2009). Metadata and provenance management. In Scientific data management (pp. 465–498). Chapman and Hall/CRC.

Deng, L., & Li, X. (2013). Machine learning paradigms for speech recognition: An overview. IEEE Transactions on Audio, Speech, and Language Processing, 21(5), 1060–1089.

Frei, P. (2013). Discussion on the Meaning of “Canberra” (https://web.archive.org/web/20130927182307/http://www.canberrahistoryweb.com/meaningofcanberra.htm). Canberra History Web; Web Archive. http://www.canberrahistoryweb.com/meaningofcanberra.htm

Galibert, O., Rosset, S., Grouin, C., Zweigenbaum, P., & Quintard, L. (2011). Structured and extended named entity evaluation in automatic speech transcriptions. Proceedings of 5th International Joint Conference on Natural Language Processing, 518–526.

Gebru, T., Morgenstern, J., Vecchione, B., Vaughan, J. W., Wallach, H., Daumé III, H., & Crawford, K. (2018). Datasheets for datasets. ArXiv Preprint ArXiv:1803.09010.

Hutchinson, B., Smart, A., Hanna, A., Denton, E., Greer, C., Kjartansson, O., Barnes, P., & Mitchell, M. (2021). Towards accountability for machine learning datasets: Practices from software engineering and infrastructure. Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 560–575. https://doi.org/10.1145/3442188.3445918

Kinsella, B., & Mutchler, A. (2020a). In-car voice assistant consumer adoption report. Voicebot.AI. https://voicebot.ai/wp-content/uploads/2020/02/in_car_voice_assistant_consumer_adoption_report_2020_voicebot.pdf

Kinsella, B., & Mutchler, A. (2020b). Smart Speaker Consumer Adoption Report 2020. Voicebot.AI. https://research.voicebot.ai/report-list/smart-speaker-consumer-adoption-report-2020/

Li, C., Rondon, P., Caseiro, D., Velikovich, L., Velez, X., & Aleksic, P. (2021). Improving Entity Recall in Automatic Speech Recognition with Neural Embeddings. ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 7353–7357.

Li, J., Deng, L., Haeb-Umbach, R., & Gong, Y. (2015). Robust automatic speech recognition: A bridge to practical applications.

Mao, T., Khassanov, Y., Xu, H., Huang, H., Wumaier, A., Chng, E. S., & others. (2021). Enriching Under-Represented Named Entities for Improved Speech Recognition. 2021 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), 1021–1025.

Panayotov, V., Chen, G., Povey, D., & Khudanpur, S. (2015). Librispeech: An ASR corpus based on public domain audio books. 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 5206–5210.

Paullada, A., Raji, I. D., Bender, E. M., Denton, E., & Hanna, A. (2020). Data and its (dis)contents: A survey of dataset development and use in machine learning research. ArXiv Preprint ArXiv:2012.05345.

Russell, S., & Norvig, P. (2021). Artificial Intelligence: A modern approach. Pearson Education Inc.

Sambasivan, N., Kapania, S., Highfill, H., Akrong, D., Paritosh, P. K., & Aroyo, L. M. (2021). “Everyone wants to do the model work, not the data work”: Data Cascades in High-Stakes AI. Google Research. https://research.google/pubs/pub49953/

Scheidl, H., Fiel, S., & Sablatnig, R. (2018). Word beam search: A connectionist temporal classification decoding algorithm. 2018 16th International Conference on Frontiers in Handwriting Recognition (ICFHR), 253–258.

Seki, H., Hori, T., Watanabe, S., Moritz, N., & Le Roux, J. (2019). Vectorized Beam Search for CTC-Attention-Based Speech Recognition. INTERSPEECH, 3825–3829.

Yadav, V., & Bethard, S. (2019). A survey on recent advances in named entity recognition from deep learning models. ArXiv Preprint ArXiv:1910.11470.

Zeng, M. L., & Qin, J. (2016). Metadata. Facet.

Zenkel, T., Sanabria, R., Metze, F., Niehues, J., Sperber, M., Stüker, S., & Waibel, A. (2017). Comparison of decoding strategies for CTC acoustic models. ArXiv Preprint ArXiv:1708.04469.