This project aimed to deepen our understanding of the decisions made while creating cyber-physical systems (CPS) with autonomous capabilities – and what better way to do so than to create one while documenting the process?

The first stage of this project involved building a quadcopter and programming it to follow a ground robot using computer vision. This taught us a lot about what decisions need to be made over the course of building such systems, and made us much more aware of the importance (and difficulty) of recording and justifying seemingly small decisions such as parameter choices. We didn’t actually have a use for the finished system in mind when we first started! We are now working it into our Masters program to add to the toolkit and skills of the emerging practitioners of the new branch of engineering.

What sparked this project?

Believe it or not, the idea for this project came from a blog article! Click here to read the article.

The post gave an outline of how to build a drone which would autonomously follow a person using computer vision, but it was sparse on the details: it said little about why decisions were made or how safety could be factored into the design and decision-making process. We wanted to see what the process would be like if we asked better questions and built the system with these issues in mind. And we had the means to do it: access to the hardware, test space and expertise (with many thanks to Rob Mahony and his research group, in particular Alex Martin and Hon Ng, and Andrew Tridgell!).

What did we do?

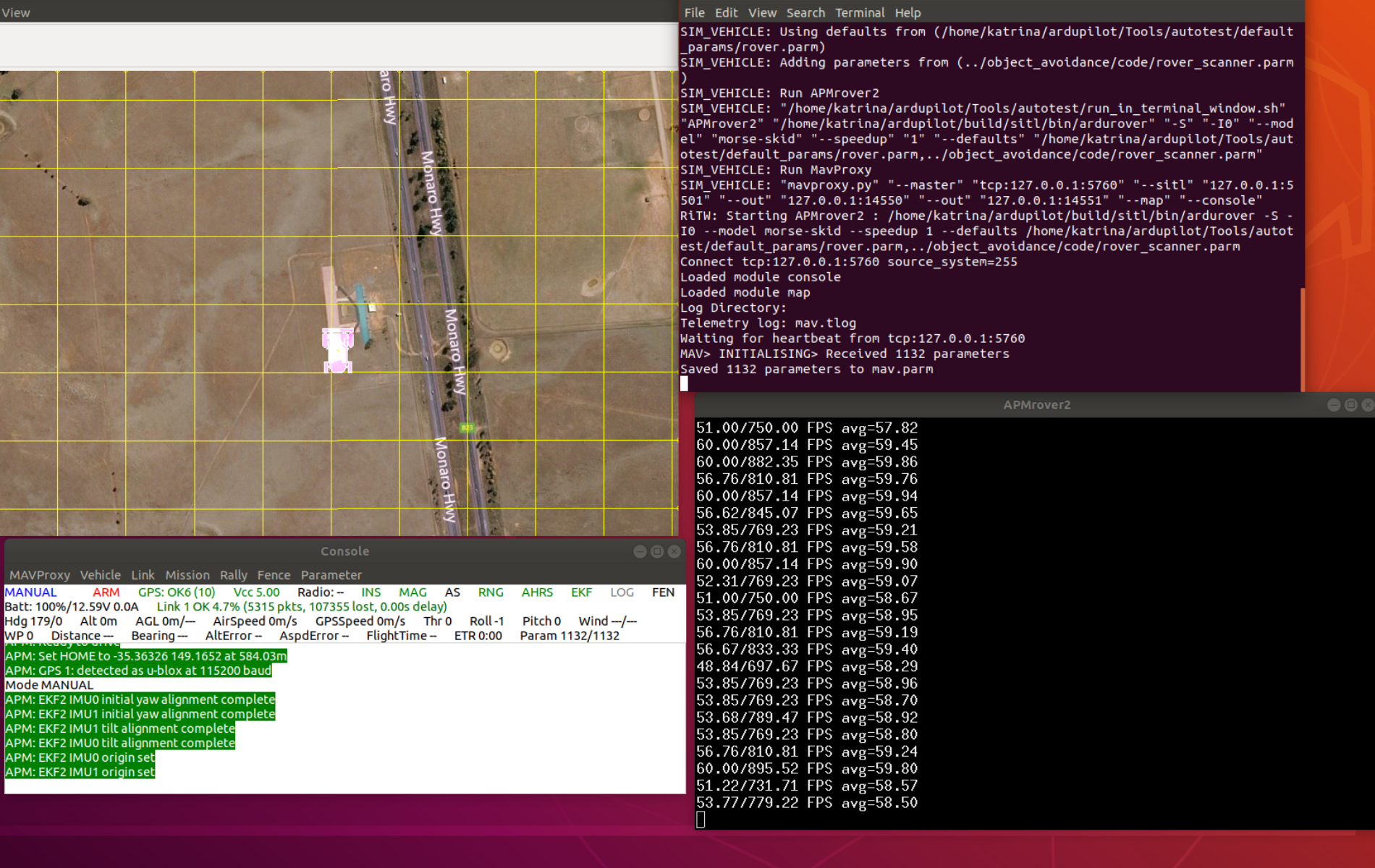

This project changed a lot over its run. We experimented and iterated, and where we ended up is certainly different to where we began. One of the first things we realised was that we wouldn’t actually be able to test the system by having it follow a human, as it was far too dangerous. So we pivoted and decided our drone would follow a ground-based robot, or ‘turtlebot’, instead. This brought its own challenges. Detecting a turtlebot is not as simple as using a pre-trained model: we had to capture images of the turtlebot, annotate them and use them to fine-tune a pre-trained object-detection model. After getting the computer vision side of things working without the flying aspect, we did a lot of development and testing in simulation before finally bringing both aspects together and flying the drone in the real world. As you might imagine, it’s quite easy to crash drones when you’re flying them in a small space, particularly for beginners. And so the next pivot: we would carry out the educational portion of this project in simulation. Not only that, we decided we would further explore object avoidance using the ground robot.

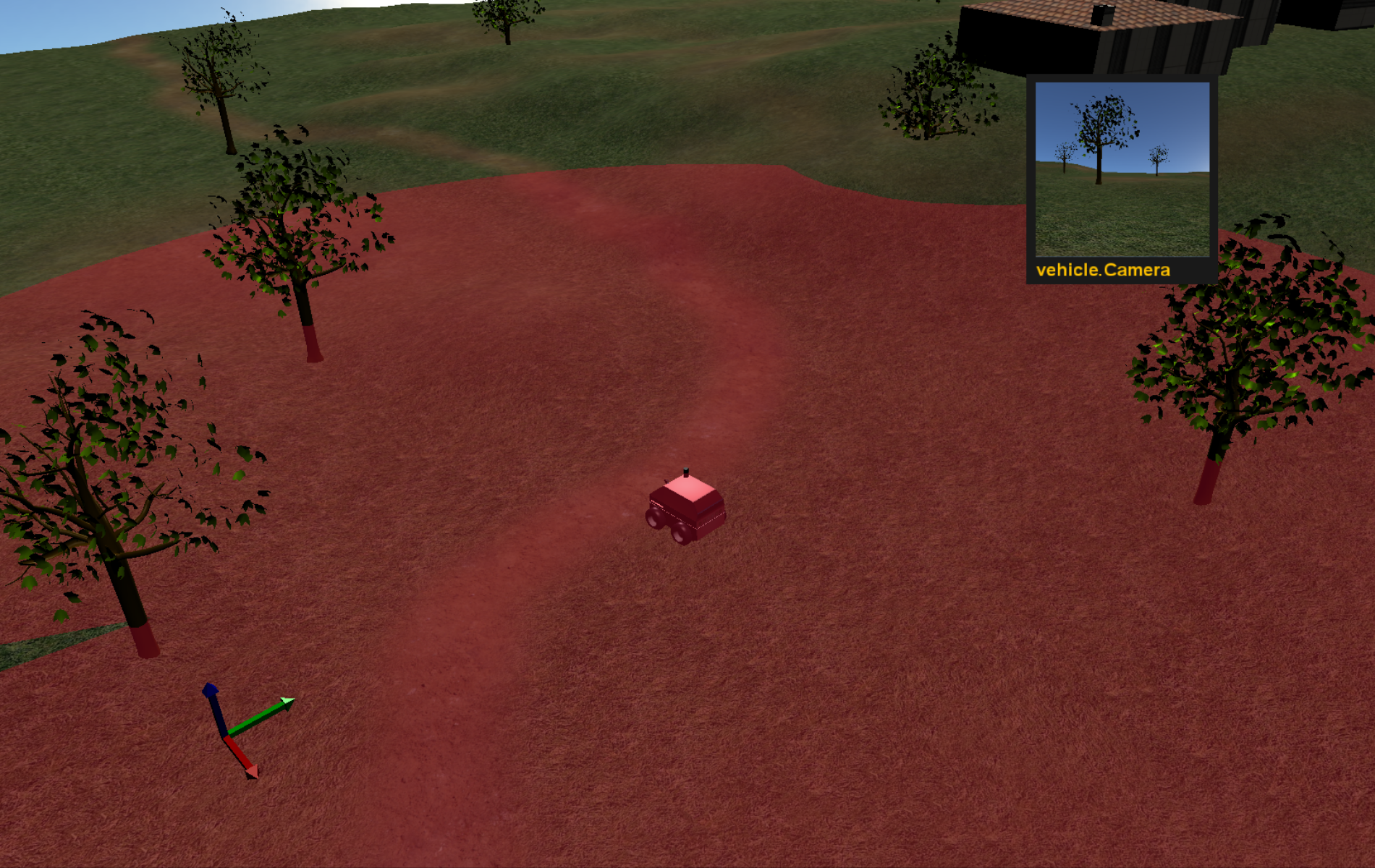

Running the ground robot simulation

What was the Aha! moment?

Like many of the current projects at 3Ai, this work was largely exploratory, with the main benefits being the insight gained along the way rather than the system itself.

On the computer vision side, we learnt about trade-offs between inference speed, accuracy and storage space, as well as other factors which can influence model choice (such as whether a trained network is available and for which framework and, for embedded systems, how easy it is to get it to work well on the hardware). On the hardware side, one aspect that stood out was the communication between components. This can be difficult to simulate realistically, and for real-time systems the difference in performance between simulation and reality can be considerable. We also found documenting the process to be quite a challenge, and came to realise just how many decisions are involved in the design of a system like this. As it turns out, carefully considering and documenting these decisions takes a lot of time!
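To make the trade-off between inference speed, accuracy and storage space a little more concrete, here is a minimal Python sketch of one way such a choice could be written down. It is not the code used in this project: the `Candidate` fields, the `measure_latency` and `pick_model` helpers and the idea of hard limits on latency and size are all assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import median
from time import perf_counter
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Candidate:
    """One candidate detector. All fields are hypothetical placeholders."""
    name: str
    accuracy: float   # e.g. a validation score between 0 and 1
    latency_s: float  # median seconds per frame on the target hardware
    size_mb: float    # size of the stored model weights


def measure_latency(infer: Callable[[], object], runs: int = 20, warmup: int = 3) -> float:
    """Return the median wall-clock time of calling `infer`, in seconds."""
    for _ in range(warmup):  # warm-up calls are not timed
        infer()
    timings = []
    for _ in range(runs):
        start = perf_counter()
        infer()
        timings.append(perf_counter() - start)
    return median(timings)


def pick_model(
    candidates: Iterable[Candidate],
    max_latency_s: float,
    max_size_mb: float,
) -> Optional[Candidate]:
    """Pick the most accurate candidate that fits both limits, or None."""
    feasible = [
        c for c in candidates
        if c.latency_s <= max_latency_s and c.size_mb <= max_size_mb
    ]
    return max(feasible, key=lambda c: c.accuracy, default=None)
```

In a sketch like this, `measure_latency` would be run on the embedded hardware itself with a function that performs one inference, and the limits passed to `pick_model` become exactly the kind of parameter choice that is worth recording and justifying.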

We are currently turning this project into a skills fortnight for 3Ai Masters students, which will allow them to learn about ArduPilot, robot simulators and object avoidance. While this project has changed a lot from its initial conception, the focus remains on safety for unmanned vehicles with autonomous capabilities and how this is addressed in the design and programming of such systems.

With thanks to 3Ai Research Assistant Katrina Ashton for capturing this snapshot.